PyPI, the Python Software Foundation's package repo, counts over half a million open source projects. Since I use many of these every day, it seemed appropriate to get to know this set of packages better, and show some appreciation. The index website provides nice search and filtering, which is good when looking for something specific. Here though, we want to take a look at every package at once, to construct a visualization, and perhaps even discover some cool new packages.

To visualize the set, then, we need to find out its structure. Luckily, PyPI provides a nice JSON API (see here for numpy's entry for instance) and, even luckier, there is a copy on BigQuery so that we don't have to bother the poor PyPI servers with >600,000 requests.

One SQL query later, we have a .jsonl of all the metadata we want. So what metadata do we want? Since we want to uncover the internal structure of the dataset, we focus on the defining feature of open source and look at the dependencies of each package. This gives a natural directed graph topology. For once, dependency hell is actually helpful!
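To make the graph construction concrete, here is a minimal sketch of turning such a .jsonl into directed edges. It assumes each line is a JSON object with a `name` field and a `requires_dist` list of requirement strings; those field names, and the helper names below, are my assumptions for illustration and not necessarily what the accompanying repository uses.

```python
import json
import re

# Leading distribution name of a requirement string, e.g. "numpy" in "numpy>=1.20".
_NAME_RE = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def normalize(name):
    """Normalize a project name: lowercase, runs of '-', '_', '.' become '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(requirement):
    """Extract the normalized package name from a requirement string, or None."""
    match = _NAME_RE.match(requirement)
    return normalize(match.group(1)) if match else None


def build_edges(jsonl_lines):
    """Return a set of (package, dependency) edges from an iterable of JSONL lines."""
    edges = set()
    for line in jsonl_lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        source = normalize(record["name"])
        # requires_dist may be missing or null for packages without dependencies.
        for requirement in record.get("requires_dist") or []:
            target = requirement_name(requirement)
            if target and target != source:
                edges.add((source, target))
    return edges


# Made-up sample records, only to show the shape of the input.
sample = [
    json.dumps({"name": "Seaborn",
                "requires_dist": ["numpy>=1.20", "pandas", "pytest; extra == 'dev'"]}),
    json.dumps({"name": "numpy", "requires_dist": None}),
]
edges = build_edges(sample)
```

Note that the sketch keeps dependencies behind extras markers (like the `dev` one above), so optional dependencies become edges too.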

Half a million nodes is a lot for an interactive graph - good motivation to look at the data more closely. Like all big datasets, the BigQuery mirror is messy, containing many not-so-classic Python packages like "the-sims-freeplay-hack", "cda-shuju-fenxishi-202209-202302" and other collateral. These seem to have been detected and taken down by PyPI, because they don't have a package website. To get down to a reasonably sized dataset, we therefore filter for packages where some important columns aren't null. This gets us down to around 100,000, so we somewhat arbitrarily filter for packages with more than 2 dependencies (and let them fill us in on the packages that they depend upon) for a smaller test dataset. We use all dependencies, including experimental, development and testing ones.
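The two filtering steps could look roughly like the sketch below. The specific columns checked for null (`home_page` and `summary`) are placeholders I picked for illustration, and the function name is hypothetical; only the "more than 2 dependencies" threshold comes from the text above.

```python
def select_packages(records, required_fields=("home_page", "summary"), min_deps=3):
    """Filter package records and return (seeds, nodes).

    seeds: packages with all required fields set and at least `min_deps`
           dependencies (min_deps=3 means "more than 2").
    nodes: the seeds plus every package they depend on.
    """
    seeds = {}
    for record in records:
        # Drop records where an "important" column is null or empty.
        if any(record.get(field) in (None, "") for field in required_fields):
            continue
        deps = record.get("requires_dist") or []
        if len(deps) >= min_deps:
            seeds[record["name"]] = list(deps)

    # Let the seeds pull in the packages they depend upon.
    nodes = set(seeds)
    for deps in seeds.values():
        nodes.update(deps)
    return seeds, nodes


# Made-up records; dependency names are assumed to be already parsed.
records = [
    {"name": "alpha", "home_page": "https://example.org", "summary": "demo",
     "requires_dist": ["numpy", "pandas", "requests"]},
    {"name": "beta", "home_page": None, "summary": "spam",
     "requires_dist": ["a", "b", "c"]},
    {"name": "gamma", "home_page": "https://example.org", "summary": "tiny",
     "requires_dist": ["numpy"]},
]
seeds, nodes = select_packages(records)
```

Here "beta" is dropped for its missing homepage and "gamma" for having too few dependencies, while "numpy" still shows up as a node because "alpha" depends on it.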

Graph layouts are a classic computer science problem, and we can use handy software designed exactly for this kind of task, like Gephi. This lets us use an algorithm of our choice, and after playing around with a few, I find that the default Force Atlas 2, an iterative spring-energy minimization, does the best job. This was expected! (This amazing talk by Daniel Spielman will convince you to love force-directed graph layouts, if you don't yet.)
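One simple way to hand a dependency graph to Gephi is an edge-list CSV with `Source`, `Target` and `Type` columns, which Gephi's spreadsheet import understands. The sketch below is an assumption about how one might do the export, not necessarily the route taken in the accompanying repository; the function name is made up.

```python
import csv
import io


def write_gephi_edges(edges, fh):
    """Write (source, target) pairs as a directed edge list for Gephi's CSV import."""
    writer = csv.writer(fh, lineterminator="\n")
    writer.writerow(["Source", "Target", "Type"])
    # Sort for a stable, diff-friendly output.
    for source, target in sorted(edges):
        writer.writerow([source, target, "Directed"])


# Small made-up example written to an in-memory buffer instead of a file.
buffer = io.StringIO()
write_gephi_edges({("seaborn", "numpy"), ("seaborn", "pandas")}, buffer)
csv_text = buffer.getvalue()
```

For a real export, open a file with `open("edges.csv", "w", newline="")` and pass it in place of the buffer; the layout itself is then run inside Gephi.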

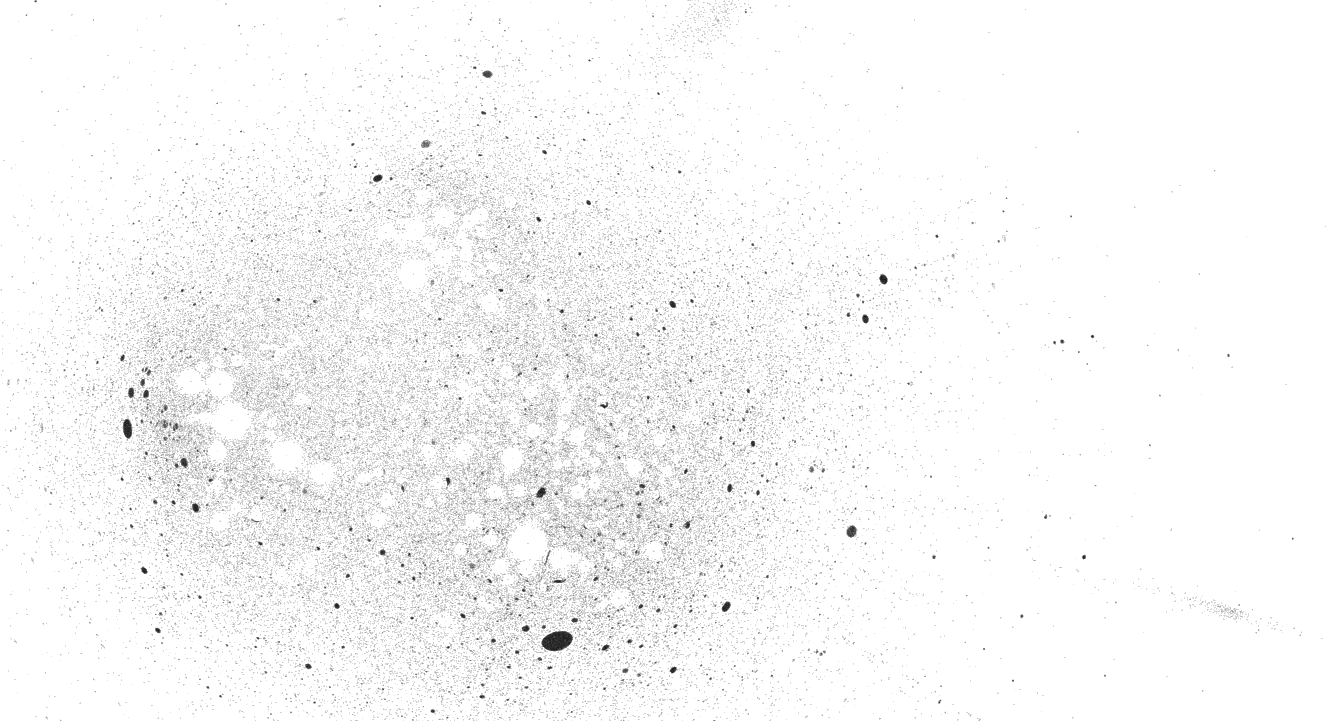

Here is the interactive graph:

I would have expected a very tightly knit cloud centering around the most common packages as the result, with little clustering. This is more or less what happens - two dimensions are just not enough to capture the neighborhoods of a highly connected graph. The mean degree is 4.97. But that is not the whole story.
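For reference, a mean degree can be computed directly from the edge list. For a directed graph there are two common conventions (edges per node, or in-degree plus out-degree per node, which is twice as large), and I am not asserting here which one the 4.97 figure corresponds to. A minimal sketch with a hypothetical helper name:

```python
def mean_degrees(edges):
    """Return (edges per node, mean in+out degree) for a set of directed edges."""
    nodes = {node for edge in edges for node in edge}
    if not nodes:
        return 0.0, 0.0
    per_node = len(edges) / len(nodes)
    # Every edge contributes one out-degree and one in-degree.
    return per_node, 2 * per_node


# Toy graph: 3 nodes, 3 edges.
per_node, total = mean_degrees({("a", "b"), ("a", "c"), ("b", "c")})
```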

We obtain clusters of packages that depend on the same set of packages. Some are innocent: depending only on numpy, for example, is a very good thing; in fact, I wish that cluster was bigger. Another cluster, though, contains only packages depending on "peppercorn", "check-manifest" and "coverage". In there, we find packages with names like "among-us-always-imposter-hack". Good job for passing the previous filter I suppose! These are copied from a template Python package called pqc, and were uploaded uninitialized. Those with obviously spammy names are taken down, but some weird ones remain. A subcluster of 10 packages named variations of "python-smshub-org" has been sitting in there since an upload in May 2019. As far as I can tell, none of the packages currently online contain malicious code, but I feel this is a proof of concept that graph drawing can find anomalies. Neat!
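The same kind of anomaly can also be surfaced without a layout, by grouping packages whose dependency sets are identical. This is a different and much cruder technique than the force-directed drawing, sketched here under the assumption that dependencies are available as a plain mapping from package name to dependency names; the package names in the sample are placeholders.

```python
from collections import defaultdict


def group_by_dependency_set(deps_by_package, min_size=2):
    """Group packages that share exactly the same set of dependencies."""
    groups = defaultdict(list)
    for package, deps in deps_by_package.items():
        # frozenset ignores order and duplicates, and is usable as a dict key.
        groups[frozenset(deps)].append(package)
    return {deps: sorted(packages)
            for deps, packages in groups.items()
            if len(packages) >= min_size}


# Placeholder package names; only the three dependency names come from the text.
deps_by_package = {
    "template-copy-1": ["peppercorn", "check-manifest", "coverage"],
    "template-copy-2": ["coverage", "peppercorn", "check-manifest"],
    "some-science-lib": ["numpy"],
}
clusters = group_by_dependency_set(deps_by_package)
```

Large groups with an unusual shared dependency set, such as the template one above, are good candidates for a closer look.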

Some organizations generate a lot of Python packages. An enterprise software miscellanea company called Triton, for instance, puts out over 300 packages with their name in them. They all depend on the same base package and are thus visualized close together. Perhaps the biggest one is another enterprise software company, Odoo, whose main package has over 3000 child packages. Similar groupings include a data pipeline company called Airbyte with 320 packages, the Objective-C bridge PyObjC with 167 packages and the content management system Plone. A corporate API client called aiobotocore apparently uses 421 packages only for its types.

The energy-based layout also finds recognizable semantic neighborhoods. Some I know better, such as north of numpy, where scikit-learn, seaborn and tensorflow are hanging out. Others less so, like the region around cryptography. This is already a nice way to window shop some packages, but I am very sure that this only scratches the surface of this dataset. Some further steps would be to visualize recursive dependency trees nicely, improve performance, and add search.

Edit: Luckily, as suggested by a reader, a more mature version of this concept with better UI exists, available here.

For replicating this, see the accompanying repository.