Computing e with a Needle

In 1812, Pierre-Simon Laplace founded a new discipline in mathematics: Computing transcendental numbers with pointy things. His remark that you can figure out π experimentally with Buffon's needle problem has earned itself a special place in many mathematicians' hearts over the years. Today, we want to exercise ourselves in this charming sport as well and calcute Euler's number - with a needle. Lovely, no?

And it really is a people's sport: Contrary to popular opinion, computing transcendental numbers is not difficult at all. Just follow these simple instructions:

- Throw a needle on the ground. The more spin the better!

- Measure the rotation of the needle, as a value between 0 and 1. That is, if you use degrees, divide your measurement by 360°. You may predetermine any straight line as your reference.

- Repeat Step 1 and add your measurements until the sum is larger than 1. Record your final sum!

- Reset your sum to 0 and repeat Step 1, until you run out of patience.

- Average your recorded numbers. Multiply by 2. Done!

(Note that I am not responsible in the very likely event that you will injure someone during this calculation.)

If you have a lot of patience, your answer is exactly Euler's number e!

Let's try to prove that this actually works:

We model the needle throws as a family of independant, identically distributed random variables \( X_1, X_2, X_3, ... \). Since they are well spun, the rotation will be uniformly distributed between 0 and 1. Now we define our sum \(S_n = \sum^n_{k=1} X_k\) and a stopping time \( N = \inf \{n | S_n>1 \} \). The numbers that will be recorded are realizations of the stopped sum

$$ S_N := \mathbb 1 _{\{ n < N \}} \cdot X_n + \mathbb 1 _{\{ n \geq N \}} \cdot X_N $$

We want to show first that for the expected value of the stopping time:

$$ E(N) = e $$

, which is where Euler's number already surfices. Note that this implies a second method of calculation, where instead of recording the sum, we simply note the number of throws until we reach 1.

The formula for the expected value that's widely known is

$$ E(X) = \sum^n_{k = 0} P(X = k) \cdot k$$

but there is a second one, in our case of \( X \geq 0 \).

$$ X = \int^X_0 d\lambda = \int^\infty_0 \mathbb 1_{\{ X \geq \lambda \}} d\lambda$$

$$\Rightarrow E(X) = \int^\infty_0 P(X \geq \lambda) d\lambda$$

so for our discrete \( N\):

$$ E(N) = \sum^\infty_{k=1} P(N \geq k) = \sum^\infty_{k=0} P(N > k)$$

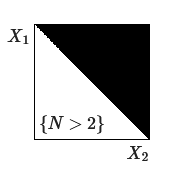

The event \( N > k \), that we record the sum after more than two throws, has a very pretty interpretation. To see this, let's sketch \(\{ N > 2 \}\) as a subset of \( im(X_1, X_2) = [0, 1]^2\). We can do this because we can judge whether the event has happened by the first two throws already.

If you would like to check whether you understand the picture, try doing the same with \(\{ N > 3 \}\).

What we find is that \( N > k \) is equivalent to a shape in the k-dimensional unit interval: \( \{x \in \mathbb R^k | \sum^k_{j=1} x_j < 1 \} \). Since we're dealing with uniform distributions, we weigh this shape with the Lebesgue measure:

$$\Rightarrow P(N > k) = \lambda^k( \{x \in \mathbb R^k | \sum^k_{j=1} x_j < 1 \} )$$

You may notice that this is not any old shape, but the famous k-dimensional unit simplex! Here we take the opportunity to prove a slightly more general fact to find out the volume:

$$\lambda^k( \{x \in \mathbb R^k | \sum^k_{j=1} x_j < c \} ) = {c^k \over k!}$$

which we can verify via induction and the wonderful Principle of Cavalieri. We slice every k+1-dimensional shape into little k-dimensional ones and weigh them with our formula. The induction step works like this:

$$ \lambda^{k+1}( \{x \in \mathbb R^{k+1} | \sum^{k+1}_{j=1} x_j < c \} ) = \int_0^c \lambda^{k}( \{x \in \mathbb R^{k} | \sum^{k}_{j=1} x_j < c - t \} ) d\lambda(t)$$

$$ = \int_0^c {(c-t)^{k} \over k!} dt = {c^{k+1} \over (k+1)!}$$

Thank you, Mr. Cavalieri! Now we understand that:

$$E(N) = \sum^\infty_{k=0} P(N > k) = \sum^\infty_{k=0} {1 \over k!} = e $$

What remains interesting is that our original algorithm also has something to do with Euler's number, namely we empirically find that somehow \( E(S_N) = \frac e 2 \). It's an application of Wald's theorem, a treasure of probablity theory that says

$$E(S_N) = E(N) \cdot E(X_1)$$

for any i.i.d \(X_i\), an independant \( N\) taking natural numbers as values and \(S_n\) like we constructed it. So we get the answer by simply noting that for us, \( E(X_1) = {1 \over 2} \). Neat!

In conclusion, if we repeat the experiment \(S_N \) often, the average converges almost surely to its expected value \(\frac e 2\) by the strong law of large numbers! Happy throwing!

Note

For my treasured readers afflicted by haemophilia or other conditions that render them unable to throw needles around, let's provide a semiconductor-based version after all. This one is Python:

import random

tries = 1000000 # This quantifies the participant's patience

average = 0 # This average is the one

# we used in the above demonstration

average_n = 0 # This is the one we noted in the proof

results = []

for i in range(tries):

sum = 0

n = 0

while sum < 1:

n += 1

sum += random.random()

average += sum

average_n += n

# print(2 * sum) # Here we can print

# out each individual recorded sum.

average /= tries / 2

average_n /= tries

print(f"E is apparently {average}.")

print(f"E is also apparently {average_n}.")

Or you can use this cute little command line tool written in C, if you have a compiler on hand.